
A few words up front:

Please credit the source when reposting. Written by Thinkagmer.

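I previously deployed a Hadoop cluster on my own computer, without any HA configuration. This time I migrated the cluster to PC servers and ran into a problem: there are only three of them, yet I still wanted HA. The servers run CentOS 6.5. My first idea was to run VMs on them and build the HA cluster on the VMs, but that did not work in testing, so I dropped it and decided to deploy the HA cluster directly on the three machines.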

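Related posts cover pseudo-distributed Hadoop deployment, standalone Hadoop deployment, distributed deployment of ZooKeeper, Hive, and HBase, and distributed deployment of Spark, Sqoop, and Mahout.

The steps are the same as for deploying a regular Hadoop cluster; only the HA-specific configuration is added, as recorded below.

For background on the HA architecture, see the separate blog post on that topic.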

I: Architecture

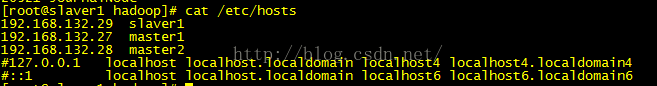

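| IP | Hostname | Role |
|---|---|---|
| 192.168.132.27 | master1 | Primary master node |
| 192.168.132.28 | master2 | Standby master node |
| 192.168.132.29 | slaver1 | Worker node |

The three-node ZooKeeper ensemble is deployed on these same three machines.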

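II: Deploying ZooKeeper

Hadoop HA relies on ZooKeeper to switch the active master node, so the ZooKeeper ensemble must be up and running before Hadoop HA is deployed. For the deployment itself, see the ZooKeeper deployment post.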

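III: Deploying HA

1: Configuration files

Apart from mapred-site.xml, core-site.xml, hdfs-site.xml, and yarn-site.xml, everything is the same as in a regular Hadoop cluster deployment and is not repeated here. The settings for the four files follow.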

mapred-site.xml:

| Property | Value |
|---|---|
| mapreduce.framework.name | yarn |
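In the actual files, each name/value pair is wrapped in a `<property>` element inside `<configuration>`. As a minimal sketch of that shape, the shell functions below print the XML for the mapred-site.xml setting above. The helper names `hadoop_property` and `hadoop_config` are made up for illustration and are not part of Hadoop; values containing `&` or `<` would need XML escaping, which this sketch does not do.

```shell
#!/usr/bin/env bash
# Hypothetical helpers (not part of Hadoop): print Hadoop-style config XML.

# hadoop_property NAME VALUE -> one <property> element
hadoop_property() {
  printf '  <property>\n    <name>%s</name>\n    <value>%s</value>\n  </property>\n' "$1" "$2"
}

# hadoop_config NAME VALUE [NAME VALUE ...] -> a complete <configuration> document
hadoop_config() {
  printf '<?xml version="1.0"?>\n<configuration>\n'
  while [ "$#" -ge 2 ]; do
    hadoop_property "$1" "$2"
    shift 2
  done
  printf '</configuration>\n'
}

# The single setting from mapred-site.xml, printed to stdout.
hadoop_config mapreduce.framework.name yarn
```

The same call with more name/value arguments would produce the other three files; redirect the output into the matching file in your Hadoop configuration directory.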

core-site.xml:

| Property | Value |
|---|---|
| fs.defaultFS | hdfs://master |
| hadoop.tmp.dir | /opt/bigdata/hadoop/tmp |
| ha.zookeeper.quorum | master1:2181,master2:2181,slaver1:2181 |

hdfs-site.xml:

| Property | Value |
|---|---|
| dfs.replication | 2 |
| dfs.namenode.name.dir | file:///opt/bigdata/hadoop/dfs/name |
| dfs.datanode.data.dir | file:///opt/bigdata/hadoop/dfs/data |
| dfs.webhdfs.enabled | true |
| dfs.nameservices | master |
| dfs.ha.namenodes.master | nn1,nn2 |
| dfs.namenode.rpc-address.master.nn1 | master1:9000 |
| dfs.namenode.rpc-address.master.nn2 | master2:9000 |
| dfs.namenode.http-address.master.nn1 | master1:50070 |
| dfs.namenode.http-address.master.nn2 | master2:50070 |
| dfs.namenode.servicerpc-address.master.nn1 | master1:53310 |
| dfs.namenode.servicerpc-address.master.nn2 | master2:53310 |
| dfs.namenode.shared.edits.dir | qjournal://master1:8485;master2:8485;slaver1:8485/master |
| dfs.journalnode.edits.dir | /opt/bigdata/hadoop/dfs/jndata |
| dfs.ha.automatic-failover.enabled | true |
| dfs.client.failover.proxy.provider.master | org.apache.hadoop.hdfs.server.namenode.ha.ConfiguredFailoverProxyProvider |
| dfs.ha.fencing.methods | sshfence and shell(/bin/true), one method per line inside the value |
| dfs.ha.fencing.ssh.private-key-files | /root/.ssh/id_rsa |
| dfs.ha.fencing.ssh.connect-timeout | 3000 |

yarn-site.xml:

| Property | Value |
|---|---|
| yarn.resourcemanager.ha.enabled | true |
| yarn.resourcemanager.ha.automatic-failover.enabled | true |
| yarn.resourcemanager.ha.automatic-failover.embedded | true |
| yarn.resourcemanager.cluster-id | yrc |
| yarn.resourcemanager.ha.rm-ids | rm1,rm2 |
| yarn.resourcemanager.hostname.rm1 | master1 |
| yarn.resourcemanager.hostname.rm2 | master2 |
| yarn.resourcemanager.ha.id | rm1 |
| yarn.resourcemanager.recovery.enabled | true |
| yarn.resourcemanager.store.class | org.apache.hadoop.yarn.server.resourcemanager.recovery.ZKRMStateStore |
| yarn.resourcemanager.zk-address | master1:2181,master2:2181,slaver1:2181 |
| yarn.nodemanager.aux-services | mapreduce_shuffle |

The yarn.resourcemanager.ha.id property carries the description "If we want to launch more than one RM in single node, we need this configuration". The value rm1 is for master1; on master2 it must be rm2 (see problem 3 at the end of this post).

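2: Starting the services and testing automatic NameNode failover

Note: pay close attention to the startup order, otherwise all sorts of errors appear (verified first-hand).

Start ZooKeeper on every machine: bin/zkServer.sh start

Initialize the HA state in ZooKeeper (run on either master node): bin/hdfs zkfc -formatZK

Start the JournalNode on every machine: sbin/hadoop-daemon.sh start journalnode (if it is not running, formatting HDFS fails; starting it by hand is only needed for the format step, and later service startups do not require it)

Format HDFS (on master1): bin/hadoop namenode -format (bin/hdfs namenode -format is the newer, non-deprecated form of the same command)

Seeing "0" (the exit status reported at the end of the output) means the format succeeded.

Start the services on master1: sbin/start-dfs.sh, then sbin/start-yarn.sh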
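The first-time startup order above can be sketched as a script. This is only an illustration under stated assumptions: passwordless SSH from the machine running it to all three hosts, Hadoop installed under /opt/bigdata/hadoop (inferred from the paths in the configuration), and a hypothetical ZooKeeper location in `ZK_HOME`. By default it runs in dry-run mode and only prints each command with its target host.

```shell
#!/usr/bin/env bash
# Sketch of the first-time startup order. Paths and the SSH approach are assumptions.
HADOOP_HOME="${HADOOP_HOME:-/opt/bigdata/hadoop}"   # assumed install dir
ZK_HOME="${ZK_HOME:-/opt/bigdata/zookeeper}"        # hypothetical install dir
NODES="master1 master2 slaver1"
DRY_RUN="${DRY_RUN:-1}"                             # 1 = only print the commands

# run HOST COMMAND: print the command; execute it over SSH unless DRY_RUN=1
run() {
  local host="$1"; shift
  echo "[$host] $*"
  if [ "$DRY_RUN" != "1" ]; then
    ssh "$host" "$*" || { echo "step failed on $host" >&2; exit 1; }
  fi
}

first_start() {
  local n
  # 1. ZooKeeper on every machine
  for n in $NODES; do run "$n" "$ZK_HOME/bin/zkServer.sh start"; done
  # 2. Initialize the HA state in ZooKeeper
  run master1 "$HADOOP_HOME/bin/hdfs zkfc -formatZK"
  # 3. JournalNodes on every machine, needed before formatting
  for n in $NODES; do run "$n" "$HADOOP_HOME/sbin/hadoop-daemon.sh start journalnode"; done
  # 4. Format HDFS on master1
  run master1 "$HADOOP_HOME/bin/hadoop namenode -format"
  # 5. Start HDFS and YARN from master1
  run master1 "$HADOOP_HOME/sbin/start-dfs.sh"
  run master1 "$HADOOP_HOME/sbin/start-yarn.sh"
}

first_start
```

Setting `DRY_RUN=0` would execute the steps for real. The two format commands can ask for confirmation when existing state is found, so running those two by hand may be the safer choice.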

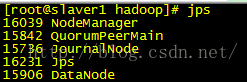

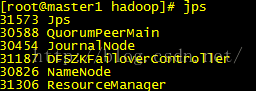

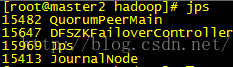

Running jps shows the following processes (master1, master2, slaver1):

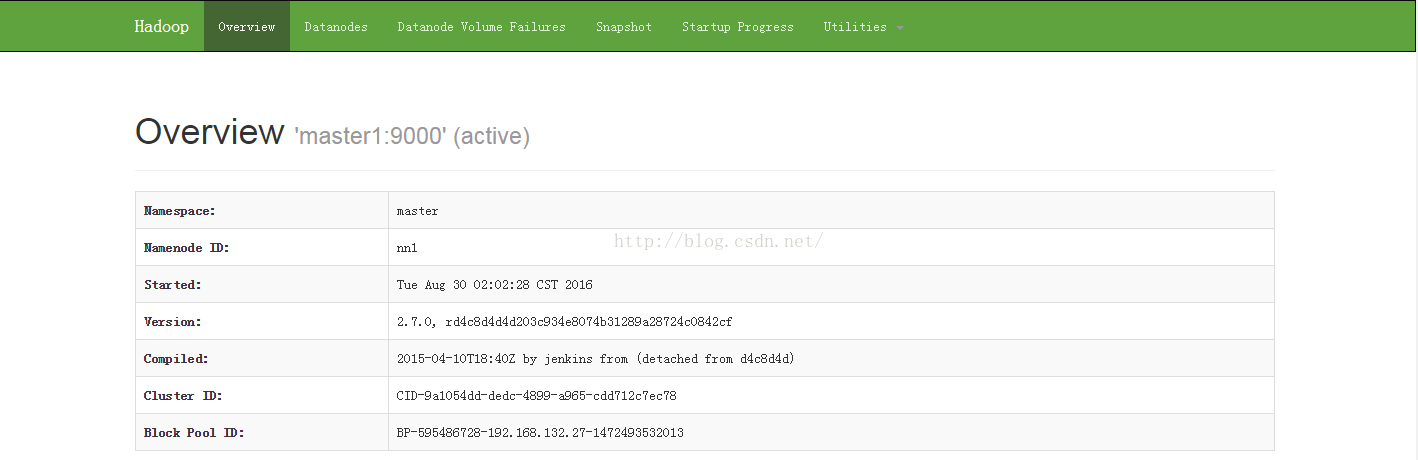

The web UI of master1 (192.168.132.27) looks like this:

Sync the standby NN with the active NN's metadata (run on master2): bin/hdfs namenode -bootstrapStandby

Start the standby NN (run on master2): sbin/hadoop-daemon.sh start namenode

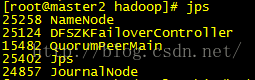

Run jps (on master2):

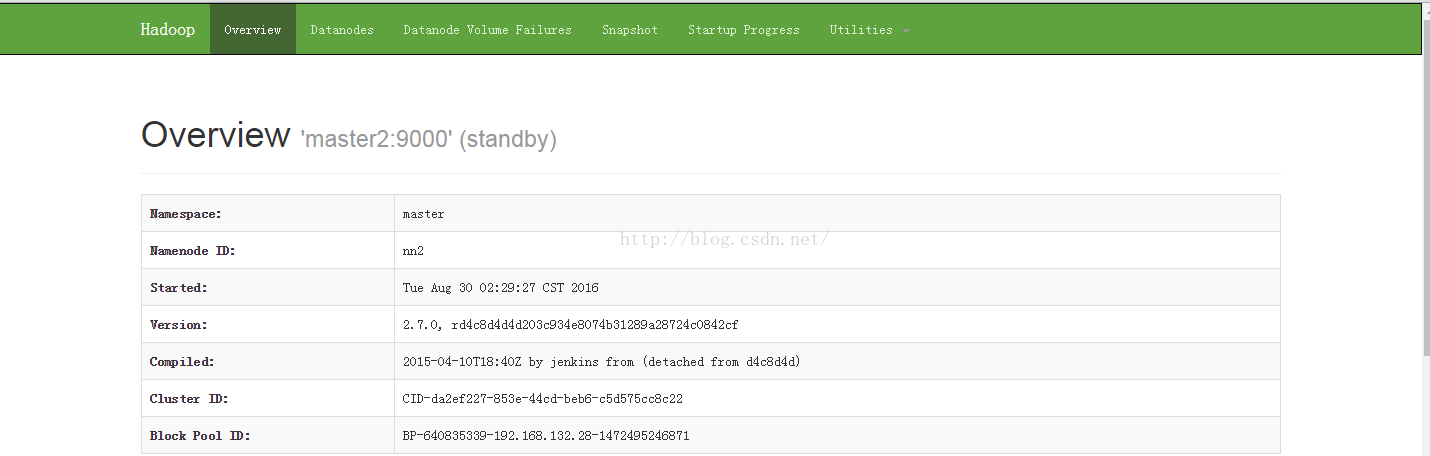

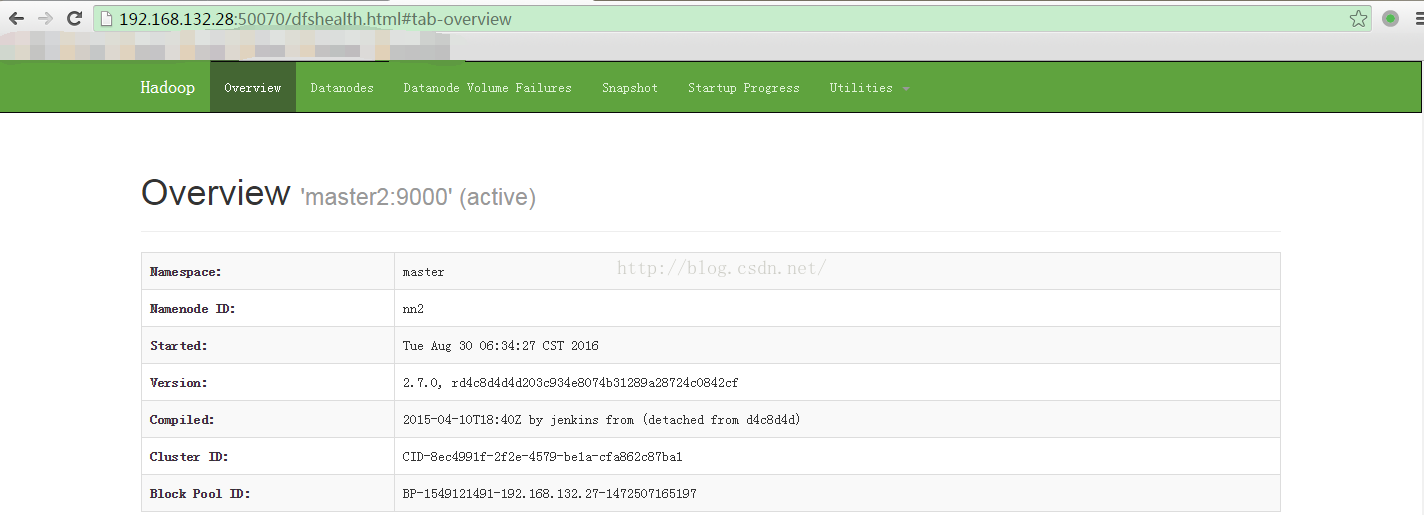

Web access:

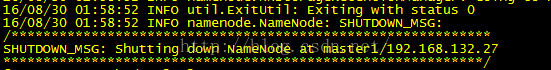

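Test failover between the active and standby NN: kill the active NN process with kill namenode_id, where namenode_id is the NameNode PID shown by jps.

Refresh the web UI of master2 again; the automatic failover has taken place: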
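The same test can be run from the command line instead of the web UI. The sketch below assumes `hdfs` and `jps` are on the PATH of the host running the active NameNode. The function names and the 10-second wait are illustrative choices, not values from this deployment. `hdfs haadmin -getServiceState` is the standard way to query a NameNode's HA state.

```shell
#!/usr/bin/env bash
# pid_of NAME: read `jps` output on stdin, print the PID whose process name is exactly NAME
pid_of() {
  awk -v name="$1" '$2 == name { print $1 }'
}

# nn_states: print the HA state of both NameNodes (ids nn1, nn2 as in hdfs-site.xml)
nn_states() {
  local id
  for id in nn1 nn2; do
    echo "$id: $(hdfs haadmin -getServiceState "$id" 2>&1)"
  done
}

# test_nn_failover: run on the host of the active NameNode
test_nn_failover() {
  local pid
  nn_states                        # before: one active, one standby
  pid="$(jps | pid_of NameNode)"
  [ -n "$pid" ] && kill "$pid"     # kill the local NameNode
  sleep 10                         # assumed to be long enough for ZKFC to react
  nn_states                        # after: the surviving NameNode should report active
}

# Nothing runs on load; call test_nn_failover explicitly when ready.
```

Matching the second column exactly keeps `pid_of NameNode` from picking up other processes whose names merely contain "NameNode".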

3: Testing automatic ResourceManager failover

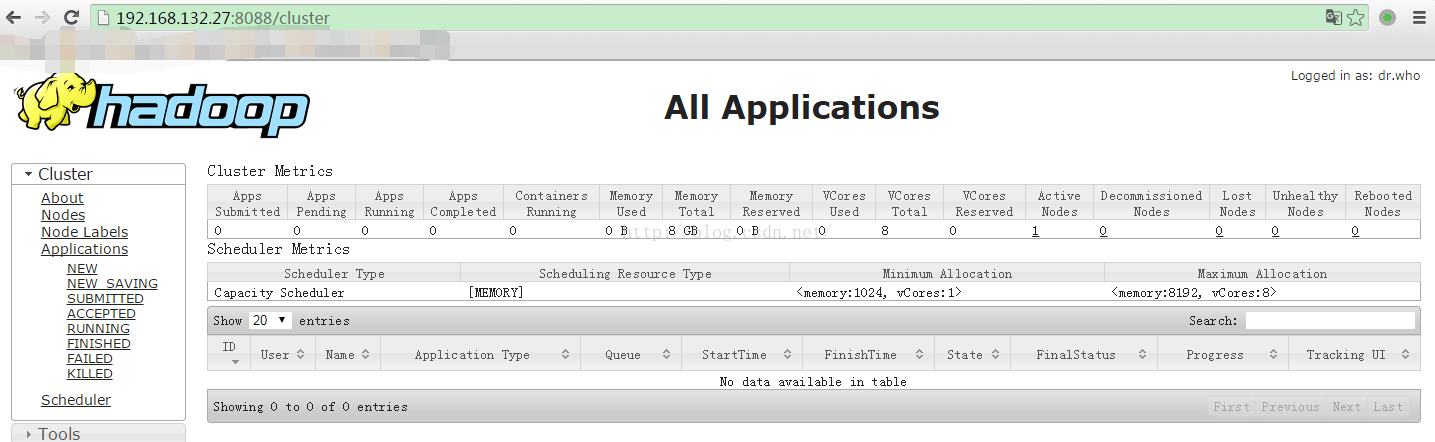

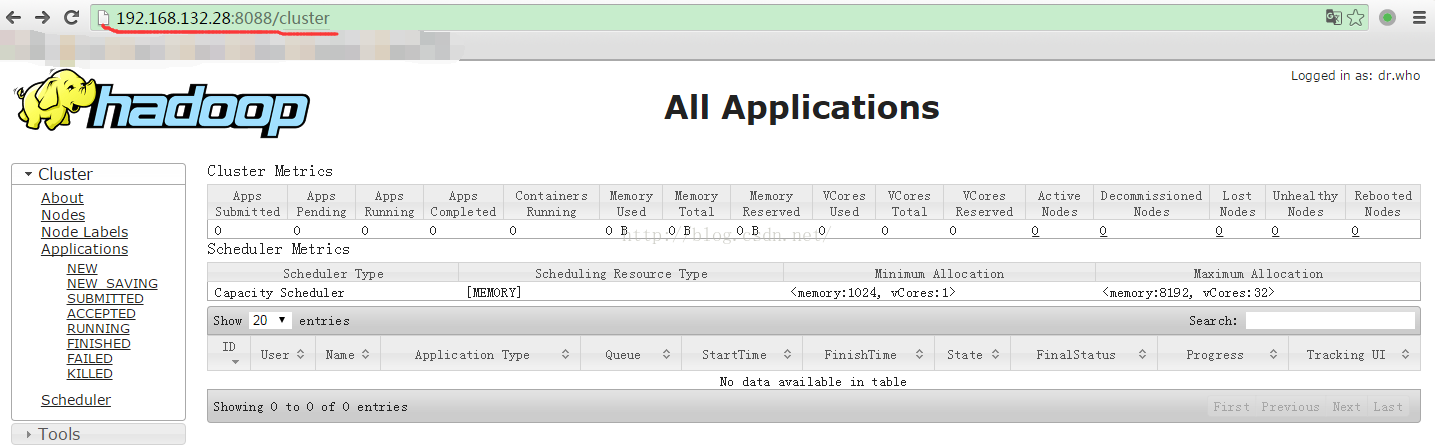

Port 8088 (the ResourceManager web UI) on the primary master node looks like this:

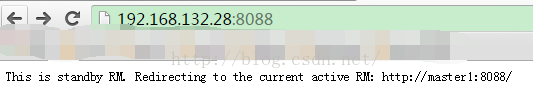

Port 8088 on the standby master node:

Kill the ResourceManager process on the primary master node, then visit port 8088 on the standby master node again.
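To see which ResourceManager is active without opening the web pages, one option is `yarn rmadmin -getServiceState`, which prints `active` or `standby` for a given RM id. The wrapper below is a hypothetical helper: it takes the state-query command as a parameter, so the lookup logic can be tried out without a cluster.

```shell
#!/usr/bin/env bash
# active_id GETTER ID...: print the first ID for which `GETTER ID` prints "active"
active_id() {
  local getter="$1" id
  shift
  for id in "$@"; do
    if [ "$($getter "$id" 2>/dev/null)" = "active" ]; then
      echo "$id"
      return 0
    fi
  done
  return 1   # no id reported "active"
}

# rm_state ID: query a ResourceManager's HA state (needs `yarn` on PATH)
rm_state() {
  yarn rmadmin -getServiceState "$1"
}

# On the cluster: active_id rm_state rm1 rm2
```

Running `active_id rm_state rm1 rm2` before and after killing the ResourceManager on the primary master should print rm1 first and rm2 afterwards, given the ids in yarn-site.xml.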

OK, all done!

IV: Problems encountered

1: NameNode format fails

Error: failed on connection exception: java.net.ConnectException: Connection refused

Fix: start the ZooKeeper ensemble first, then start the JournalNode process on every machine with sbin/hadoop-daemon.sh start journalnode, and only then run the format.
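A quick way to confirm the JournalNodes are listening before formatting is to probe port 8485 (the port in dfs.namenode.shared.edits.dir) on each host. This is a minimal sketch that relies on bash's `/dev/tcp` redirection and the coreutils `timeout` command; the function names and the 2-second limit are illustrative.

```shell
#!/usr/bin/env bash
# port_open HOST PORT: succeed if a TCP connection can be opened within 2 seconds
port_open() {
  timeout 2 bash -c "exec 3<>/dev/tcp/$1/$2" 2>/dev/null
}

# journalnodes_ready: check port 8485 on the three JournalNode hosts
journalnodes_ready() {
  local host failed=0
  for host in master1 master2 slaver1; do
    if port_open "$host" 8485; then
      echo "$host:8485 reachable"
    else
      echo "$host:8485 NOT reachable" >&2
      failed=1
    fi
  done
  return "$failed"
}

# Example: journalnodes_ready && bin/hadoop namenode -format
```

If any host is reported as not reachable, the format would be expected to fail with the Connection refused error shown above.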

For this error, see the blog post: http://blog.csdn.net/u014729236/article/details/44944773

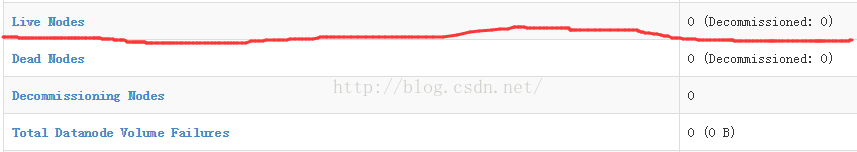

2: The web UI shows 0 live nodes

Fix: comment out the two lines that were originally present in each machine's hosts file.
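The post does not say which two lines these are. Assuming they are the default loopback entries (the 127.0.0.1 and ::1 lines that a fresh CentOS install puts in /etc/hosts), the following sketch lists the candidates that are still active. The function name is hypothetical.

```shell
#!/usr/bin/env bash
# loopback_lines [FILE]: print uncommented loopback entries (with line numbers)
# from FILE, defaulting to /etc/hosts. Assumes the "two original lines" are the
# 127.x and ::1 entries; that is an assumption, not something the post states.
loopback_lines() {
  grep -nE '^[[:space:]]*(127\.|::1)' "${1:-/etc/hosts}"
}

# Example: loopback_lines /etc/hosts
```

Lines already prefixed with `#` are not reported, so an empty result suggests the fix is already in place on that machine.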

3: NameNode and ResourceManager on master2 fail to start

    2016-08-30 06:10:57,558 INFO org.apache.hadoop.http.HttpServer2: HttpServer.start() threw a non Bind IOException
    java.net.BindException: Port in use: master1:8088
        at org.apache.hadoop.http.HttpServer2.openListeners(HttpServer2.java:919)
        at org.apache.hadoop.http.HttpServer2.start(HttpServer2.java:856)
        at org.apache.hadoop.yarn.webapp.WebApps$Builder.start(WebApps.java:274)
        at org.apache.hadoop.yarn.server.resourcemanager.ResourceManager.startWepApp(ResourceManager.java:974)
        at org.apache.hadoop.yarn.server.resourcemanager.ResourceManager.serviceStart(ResourceManager.java:1074)
        at org.apache.hadoop.service.AbstractService.start(AbstractService.java:193)
        at org.apache.hadoop.yarn.server.resourcemanager.ResourceManager.main(ResourceManager.java:1208)
    Caused by: java.net.BindException: Cannot assign requested address
        at sun.nio.ch.Net.bind0(Native Method)
        at sun.nio.ch.Net.bind(Net.java:444)
        at sun.nio.ch.Net.bind(Net.java:436)
        at sun.nio.ch.ServerSocketChannelImpl.bind(ServerSocketChannelImpl.java:214)
        at sun.nio.ch.ServerSocketAdaptor.bind(ServerSocketAdaptor.java:74)
        at org.mortbay.jetty.nio.SelectChannelConnector.open(SelectChannelConnector.java:216)
        at org.apache.hadoop.http.HttpServer2.openListeners(HttpServer2.java:914)
        ... 6 more

The log reports the port as in use. The "Cannot assign requested address" cause suggests that the ResourceManager on master2 was trying to bind master1:8088, because its yarn-site.xml still carried the id rm1. On master2, change this property in yarn-site.xml:

| Property | Value |
|---|---|
| yarn.resourcemanager.ha.id | rm2 |

The property keeps its description: "If we want to launch more than one RM in single node, we need this configuration". After this change, starting the services again works.
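Since yarn-site.xml is usually copied unchanged to every node, this mismatch is easy to reintroduce. The sketch below compares the id in the file with the one expected for a host. The function names are hypothetical, and the grep/sed extraction assumes `<name>` and `<value>` sit on the same or on consecutive lines, as in a conventionally formatted file.

```shell
#!/usr/bin/env bash
# rm_id_for HOST: print the yarn.resourcemanager.ha.id that belongs on HOST
rm_id_for() {
  case "$1" in
    master1) echo rm1 ;;
    master2) echo rm2 ;;
    *)       return 1 ;;   # other hosts run no ResourceManager
  esac
}

# configured_rm_id FILE: print the yarn.resourcemanager.ha.id value found in FILE
configured_rm_id() {
  grep -A1 '<name>yarn.resourcemanager.ha.id</name>' "$1" |
    sed -n 's:.*<value>\(.*\)</value>.*:\1:p'
}

# rm_id_ok FILE HOST: succeed if FILE carries the id expected for HOST
rm_id_ok() {
  [ "$(configured_rm_id "$1")" = "$(rm_id_for "$2")" ]
}

# Example (the path is an assumption about the install layout):
#   rm_id_ok /opt/bigdata/hadoop/etc/hadoop/yarn-site.xml "$(hostname -s)"
```

Running the check on master2 before starting the ResourceManager would catch a leftover rm1.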

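4: NameNode does not fail over automatically

Fix: set the fencing method in hdfs-site.xml as follows:

| Property | Value |
|---|---|
| dfs.ha.fencing.methods | shell(/bin/true) |

A likely reason this helps: sshfence has to log in to the failed NameNode's host over SSH, and if that fails, the failover is blocked. shell(/bin/true) always reports success, so the standby can take over.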